Redis Pings Then Expire Over and Over Again

High-Concurrency Practices of Redis: Snap-Up System

By Qianyi

Contents

1. I/O Models and Their Problems

2. Resource Contention and Distributed Locks

3. Redis Snap-Up System Instances

1. I/O Models and Their Problems

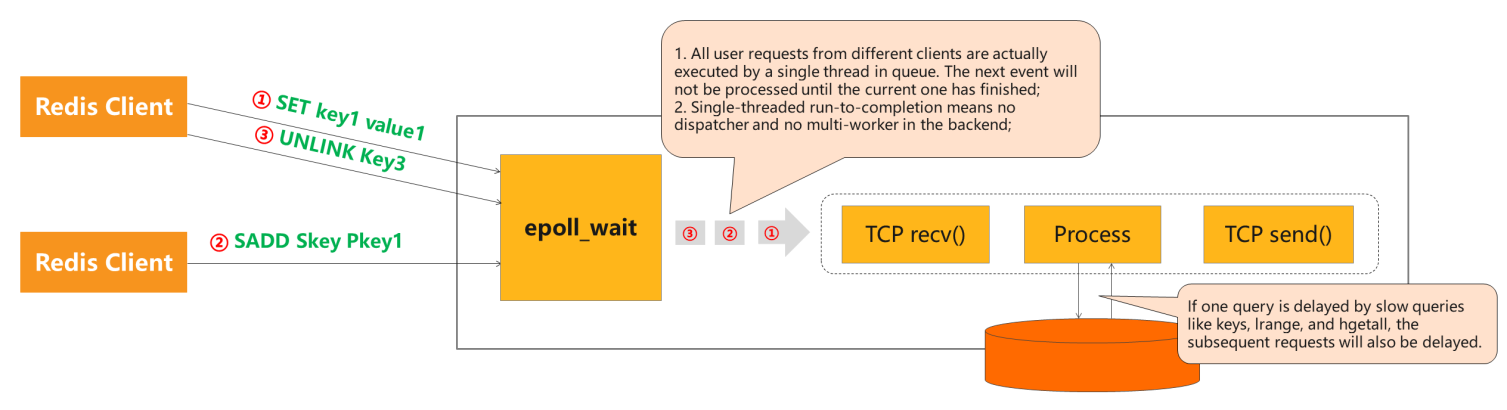

1) Run-to-Completion in a Solo Thread

The I/O model of Redis Community Edition is simple. Generally, all commands are parsed and processed by one I/O thread.

However, if there is a slow query command, other queries have to queue up. In other words, when a client runs a command too slowly, subsequent commands are blocked. Sentinel revitalization cannot help because it relies on the ping command, which is delayed by slow queries as well. If the engine gets stuck, the ping command fails, so Sentinel cannot tell whether the service is actually unavailable and may misjudge it.

If the service does not respond and the Master is switched to the Slave, slow queries will slow down the Slave too. The ping command may then make the same misjudgment. As a result, it is difficult to monitor service availability.

Summary

- All user requests from different clients are executed by a single thread in a queue. The next event will not be processed until the current one has finished.

- Single-threaded run-to-completion means no dispatcher and no multi-worker in the backend.

If one query is delayed by slow queries, such as keys, lrange, and hgetall, the subsequent requests will also be delayed.

Defects of Using Sentinel for Revitalization

- The Ping Command Misjudgment: The ping command is also affected by slow queries and will fail if the engine gets stuck.

- Duplex Failure: After a Master/Slave switchover caused by a slow query, Sentinel fails again when the new Master processes other slow queries.
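The queueing effect described above can be illustrated with a small simulation. This is only a sketch: the command costs and the 1,000 ms ping timeout are made-up numbers, and `run_to_completion` is a hypothetical helper, not part of Redis.

```python
from collections import namedtuple

# Each command has an arrival time and a processing cost, both in milliseconds.
Command = namedtuple("Command", ["name", "arrival_ms", "cost_ms"])

def run_to_completion(commands):
    """Serve commands in arrival order on one thread; return {name: latency_ms}."""
    clock = 0
    latency = {}
    for cmd in sorted(commands, key=lambda c: c.arrival_ms):
        start = max(clock, cmd.arrival_ms)  # wait until the single thread is free
        clock = start + cmd.cost_ms         # the thread is busy until this command ends
        latency[cmd.name] = clock - cmd.arrival_ms
    return latency

PING_TIMEOUT_MS = 1000  # hypothetical health-check timeout

workload = [
    Command("keys *", 0, 3000),  # slow query
    Command("get a", 10, 1),     # cheap query stuck behind it
    Command("ping", 20, 1),      # health check stuck behind both
]
latency = run_to_completion(workload)
ping_timed_out = latency["ping"] > PING_TIMEOUT_MS
print(latency, ping_timed_out)
```

Although the ping itself costs 1 ms, it waits behind the slow query, so a health check with this timeout would report the instance as down.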

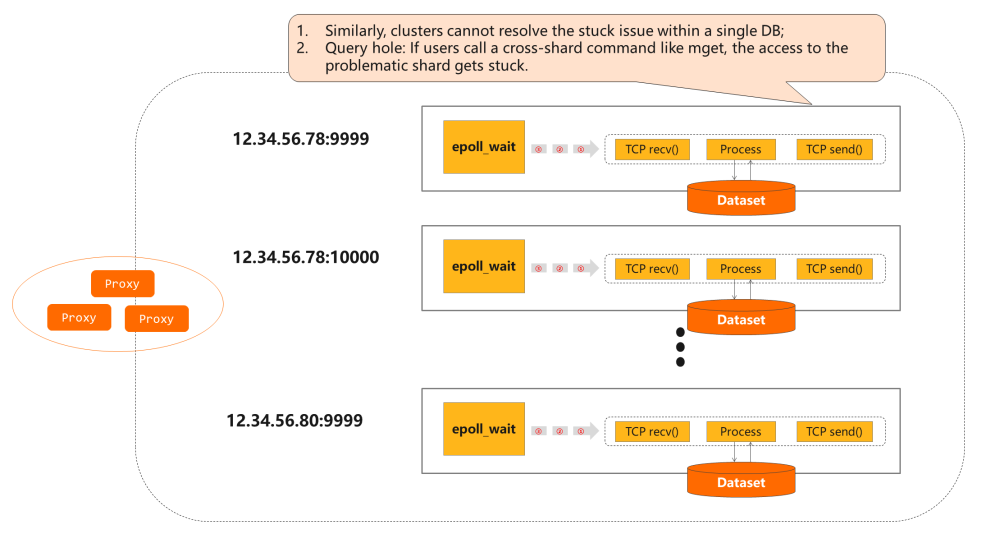

2) Make It a Cluster

This problem also exists in a cluster containing multiple shards. If a slow query delays processing in one shard, a cross-shard command like mget that touches the problematic shard gets stuck. Thus, all subsequent commands are blocked.

Summary

- Similarly, clusters cannot resolve the stuck issue within a single DB.

- Query Hole: If users call a cross-shard command like mget, the access to the problematic shard gets stuck.

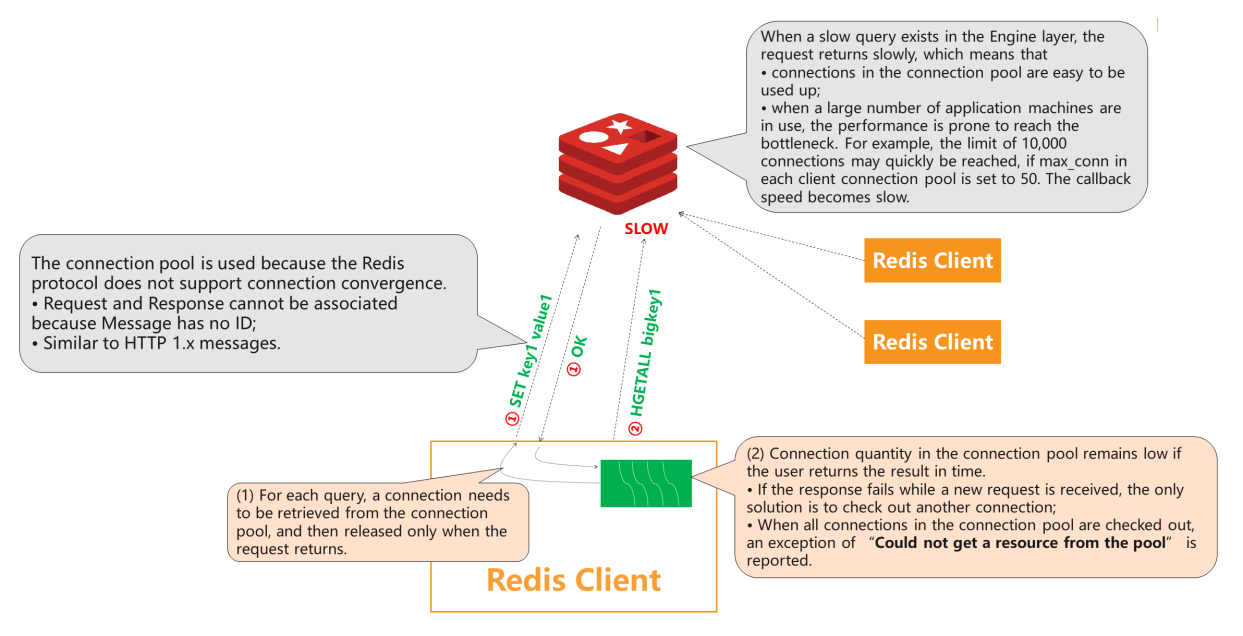

3) "Could Not Get a Resource from the Pool"

Common Redis clients like Jedis provide connection pools. When a service thread accesses Redis, a persistent connection is retrieved from the pool for each query. If the query is processed too slowly, the connection is held longer, because it cannot be used by other threads until the request returns a result.

If all queries are processed slowly, each service thread checks out a new persistent connection, and the pool is gradually consumed. Because the Redis server is single-threaded, when one persistent connection is blocked by a slow query, requests on the other connections are not processed in time either, so none of the connections can be released back to the pool. Eventually, an exception of "no resources available in the connection pool" is reported.

Connection convergence is not supported by the Redis protocol, because a Message carries no ID. Thus, a Request and its Response cannot be associated, and a connection pool is used instead. To implement asynchronization, the callback is put into a queue unique to each connection when a request is sent, and it is retrieved and executed after the response returns. This is the FIFO model. However, the server cannot return responses out of order on a connection, because the client would not be able to match them. Generally, the client uses BIO, which blocks a connection and only allows other threads to use it after the request returns.

However, asynchronization cannot improve efficiency, which is limited by the single-threaded server. Even if the client modifies the access method to allow multiple connections to send requests at the same time, these requests still have to queue up in the server. Slow queries still block other persistent connections.
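The FIFO callback model described above can be sketched in a few lines. `PipelinedConnection` is a hypothetical class for illustration, not the API of any real client. It only shows why in-order replies are enough to match a response to its request without a message ID.

```python
from collections import deque

class PipelinedConnection:
    """One connection with a FIFO callback queue (replies arrive in request order)."""

    def __init__(self):
        self._callbacks = deque()
        self.sent = []  # commands written to the (imaginary) socket

    def send(self, command, callback):
        self._callbacks.append(callback)  # enqueue the callback before sending
        self.sent.append(command)

    def on_reply(self, reply):
        callback = self._callbacks.popleft()  # the oldest pending request owns this reply
        callback(reply)

results = []
conn = PipelinedConnection()
conn.send("get a", lambda r: results.append(("get a", r)))
conn.send("get b", lambda r: results.append(("get b", r)))
conn.on_reply("1")  # first reply belongs to "get a"
conn.on_reply("2")  # second reply belongs to "get b"
print(results)
```

If the server were allowed to answer "get b" first, the queue would hand that reply to the wrong callback, which is why responses must stay in order.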

Another serious problem comes from the Redis thread model. Its performance degrades when the I/O thread handles more than 10,000 connections. For example, with 20,000 to 30,000 persistent connections, the performance will be unacceptable for the business. On the business side, if there are 300 to 500 machines and each one holds 50 persistent connections, that is 15,000 to 25,000 connections, so it is easy to reach the performance bottleneck.

Summary

The connection pool is used because the Redis protocol does not support connection convergence.

- Request and Response cannot be associated because Message has no ID.

- It is similar to HTTP 1.x messages.

When a slow query exists in the Engine layer, the request returns slowly, which means:

- Connections in the connection pool can be depleted easily.

- When a large number of application machines are in use, the performance is prone to reach the bottleneck. For example, the limit of 10,000 connections may be reached quickly. If max_conn in each client connection pool is set to 50, the callback speed becomes slow.

- For each query, a connection needs to be retrieved from the connection pool and is only released when the request returns.

- The number of connections in use remains low if the server returns results in time.

- If a response has not returned when a new request arrives, the only solution is to check out another connection.

- When all connections in the connection pool are checked out, an exception of "Could not get a resource from the pool" is reported.
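The depletion described in this list can be reproduced with a toy pool. This is a minimal sketch: `ConnectionPool` and `simulate` are hypothetical names, and a "slow" server is modeled simply as one that never returns within the burst.

```python
class PoolExhausted(Exception):
    pass

class ConnectionPool:
    """Toy pool that only counts checked-out connections."""

    def __init__(self, max_conn):
        self.max_conn = max_conn
        self.in_use = 0

    def checkout(self):
        if self.in_use >= self.max_conn:
            raise PoolExhausted("Could not get a resource from the pool")
        self.in_use += 1

    def release(self):
        self.in_use -= 1

def simulate(max_conn, requests, slow):
    """Return how many requests got a connection before the pool ran dry."""
    pool = ConnectionPool(max_conn)
    served = 0
    for _ in range(requests):
        try:
            pool.checkout()
        except PoolExhausted:
            break
        served += 1
        if not slow:
            pool.release()  # fast reply: the connection goes straight back
    return served

print(simulate(50, 200, slow=False), simulate(50, 200, slow=True))
```

With fast replies a pool of 50 serves all 200 requests. With a stuck server the 51st request fails.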

Here are some solutions for asynchronous interfaces implementation based on the Redis protocol:

- As mentioned above, a connection can be assigned with a callback queue. The callback is put into the queue before asynchronous requests are sent and retrieved after the result is returned. This is a common method, generally implemented by mainstream clients that support asynchronous requests.

- There are also some tricky methods, like using the Multi-Exec and ping commands to encapsulate the request. For example, to call the set k v command, encapsulate the request in the following form:

multi
ping {id}
set k v
exec

The server will return the following:

{id}
OK

This tricky method uses the atomicity of Multi-Exec and the fact that the ping command returns its parameter unmodified to "attach" message IDs to the protocol. However, this method is basically not used by any client.
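The Multi-Exec encapsulation can be sketched as two small helpers. `wrap_with_id` and `unwrap_exec_reply` are hypothetical names used only for illustration. The reply shape assumes that EXEC returns one reply per queued command, in order.

```python
def wrap_with_id(request_id, command):
    """Return the command sequence that tags `command` with `request_id`."""
    return [["MULTI"], ["PING", str(request_id)], list(command), ["EXEC"]]

def unwrap_exec_reply(exec_reply):
    """Split the EXEC reply into (echoed id, result of the real command)."""
    request_id, result = exec_reply
    return request_id, result

sequence = wrap_with_id(42, ["SET", "k", "v"])
# The EXEC reply for the sequence above would be the echoed id plus the SET result.
request_id, result = unwrap_exec_reply(["42", "OK"])
print(sequence, request_id, result)
```

The cost is visible in the sequence itself: every request becomes four commands, which may be one reason the approach is rarely used.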

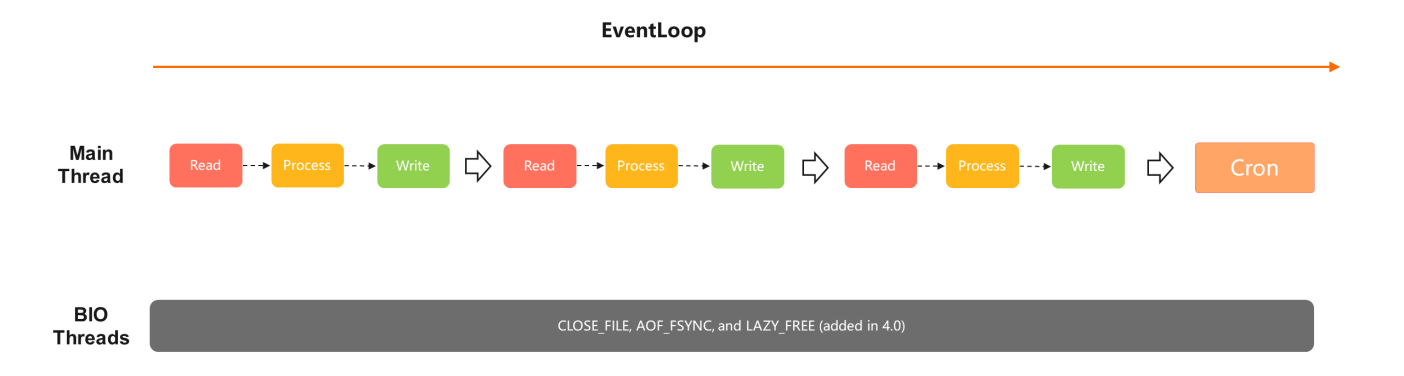

4) Redis 2.x/4.x/5.x Thread Models

The model of the well-known versions up to Redis 5.x remains the same. All commands are processed by a single thread, and all reads, processing, and writes run in the same main I/O thread. There are several BIO threads that close or flush files in the background.

In versions later than Redis 4.0, LAZY_FREE is added, allowing certain big keys to be released asynchronously to avoid blocking the synchronous processing of tasks. By contrast, Redis 2.8 gets stuck when evicting or expiring large keys. Therefore, Redis 4.0 or later is recommended.

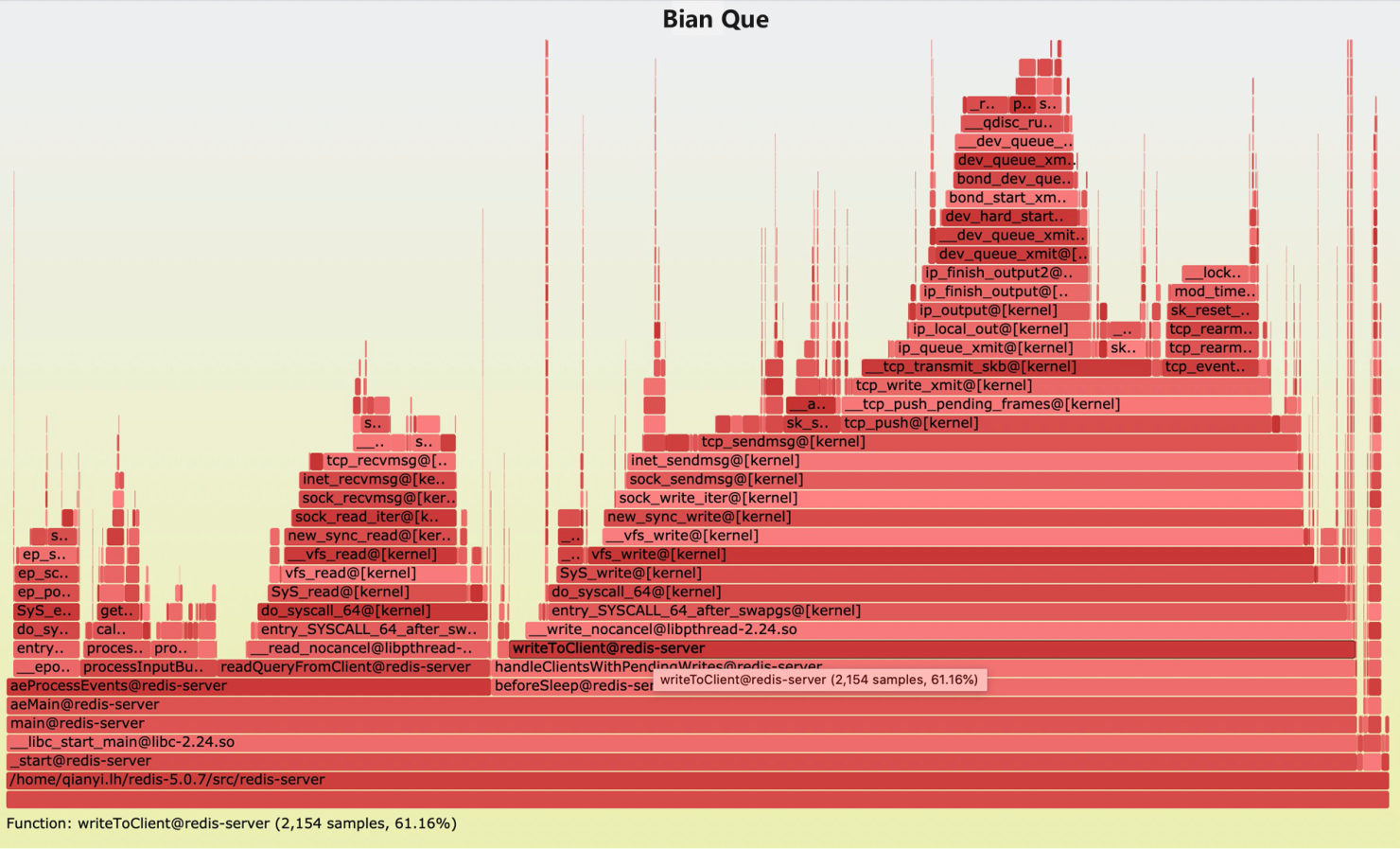

5) Flame Graph of Redis 5.x

The following figure shows the performance analysis result. The two parts on the left are about command processing, the middle part is "reading," and the rightmost part is "writing," which occupies 61.16%. Thus, we can tell that most of the time is spent on network I/O.

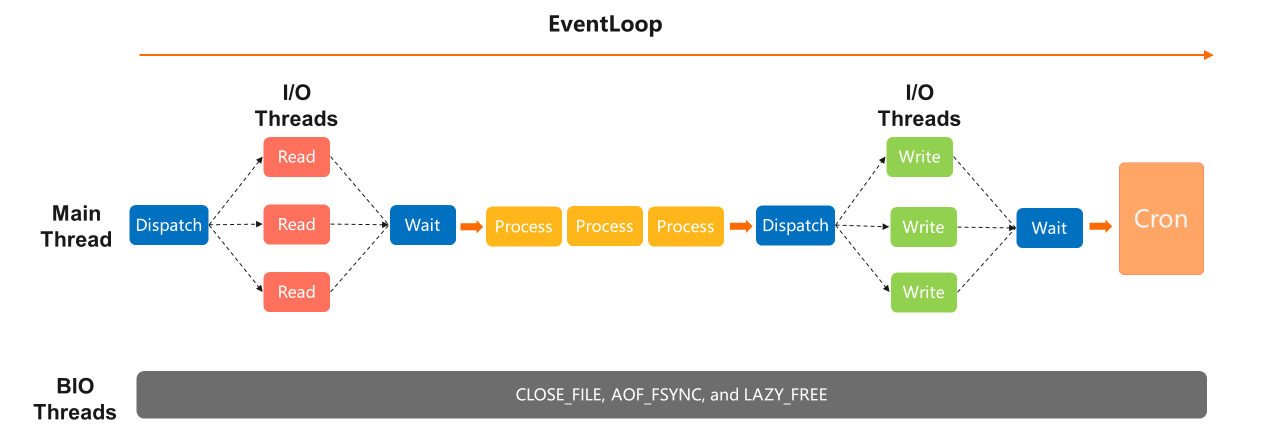

6) Redis 6.x Thread Model

With the improved model of Redis 6.x, the "reading" task can be delegated to I/O threads for processing after readable events are triggered in the main thread. After the reading task finishes, the result is returned to the main thread for further processing. The "writing" task can also be distributed to I/O threads, which improves the performance.

The performance improvement can be really impressive for O(1) commands like simple "reading" and "writing," even though only one thread runs commands. If the command is complex, the improvement is rather limited, because there is still only one running thread in the DB.

Another problem lies in time consumption. Every "reading" and "writing" task needs to wait for the result after being distributed. Therefore, the main thread idles for a long time, during which service cannot be provided. More improvements to the Redis 6.x model are expected.

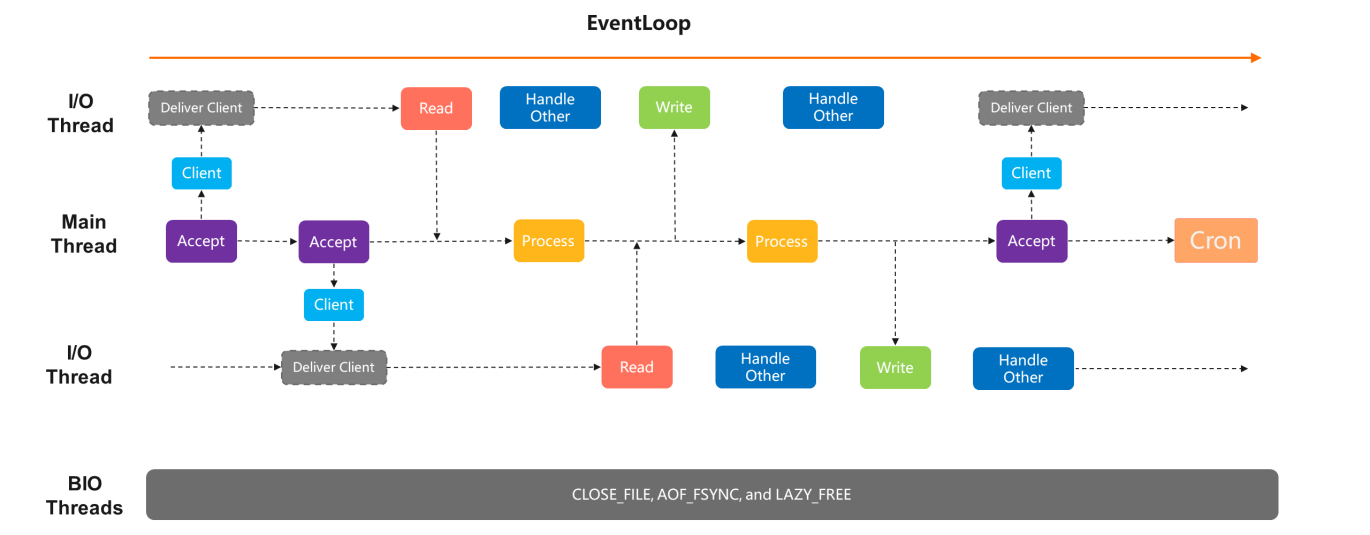

7) Thread Model of Alibaba Cloud Redis Enterprise Edition (Performance-Enhanced Tair)

The model of Alibaba Cloud Redis Enterprise Edition splits the entire event into parts. The main thread is only responsible for command processing, while all reading and writing tasks are processed by I/O threads. Connections are no longer retained only in the main thread. The main thread only needs to read once after the event starts. After the client is connected, the reading tasks are handed over to other I/O threads. Thus, the main thread does not care about readable and writable events from clients.

When a command arrives, the I/O thread forwards the command to the main thread for processing. Later, the processing result is passed to the I/O thread through notification for writing. By doing so, the waiting time of the main thread is reduced as much as possible to enhance the performance further.

The same disadvantage applies. Only one thread is used in command processing. The improvement is great for O(1) commands but not enough for commands that consume many CPU resources.

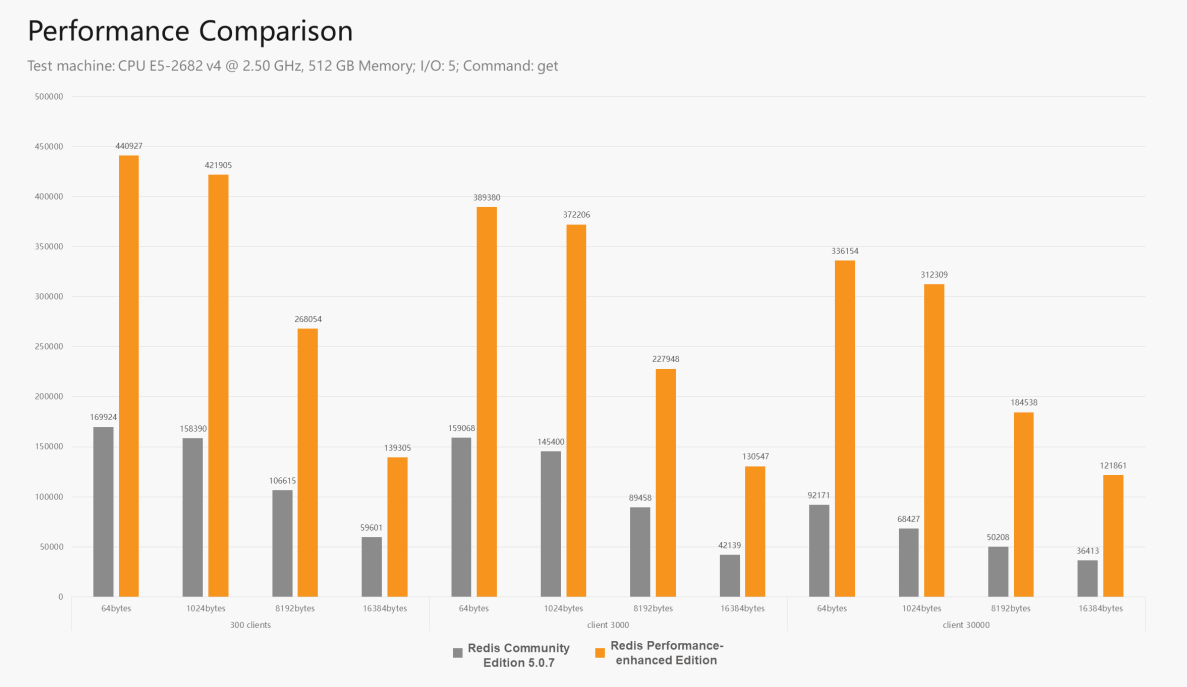

8) Performance Comparison Test

In the following figure, the grey color on the left stands for Redis Community Edition 5.0.7, and the orange color on the right stands for Redis Performance-Enhanced Edition. The multi-thread performance of Redis 6.x is in between. The test uses a read command, which is bound by I/O rather than CPU. Therefore, the performance improvement is great. If the command used consumes many CPU resources, the difference between the two editions shrinks to nothing.

It is worth mentioning that Redis Community Edition 7 is in the planning phase. Currently, it is being designed to adopt a modification scheme similar to the one used by Alibaba Cloud. With this scheme, it can gradually approach the performance bottleneck of a single main thread.

Performance improvement is only one of the benefits. Another benefit of distributing connections to I/O threads is that the number of supported connections increases linearly. You can add I/O threads to deal with more connections. Redis Enterprise Edition supports tens of thousands of connections by default. It can even support more connections, such as 50,000 or 60,000 persistent connections, to solve the issue of insufficient connections during large-scale machine scaling at the business layer.

2. Resource Contention and Distributed Locks

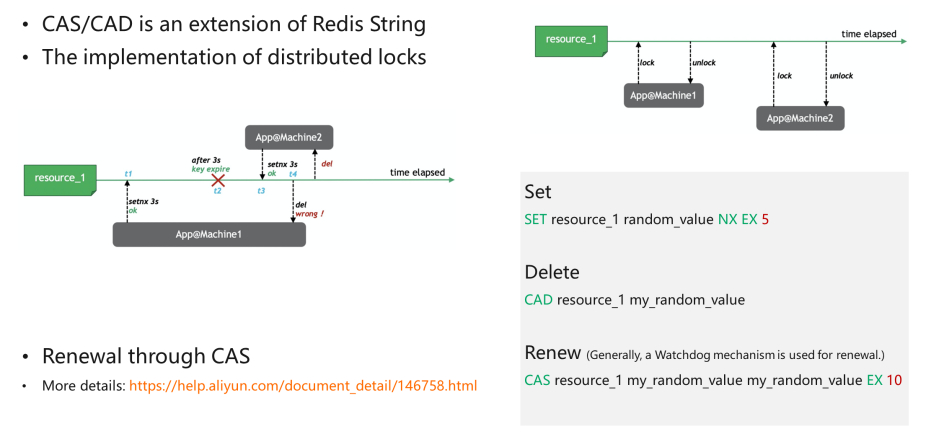

1) CAS/CAD High-Performance Distributed Lock

The write command of Redis String has a parameter called NX, which specifies that the string is written only if the key does not exist yet. This naturally behaves like a lock, which facilitates the lock operation. By taking a random value and setting it with the NX parameter, atomicity can be ensured.

An "EX" parameter is added to set an expiration time, which ensures the lock is released if the business machine fails. Without it, if a lock is not released after the machine fails or is disabled for some reason, it will never be unlocked.

The parameter "5" is an example. It does not have to be 5 seconds. The right value depends on the specific tasks to be done by the business machine.

The removal of distributed locks can be troublesome. Here's a case. A machine that holds the lock gets stuck or loses contact due to some sudden incident. Five seconds later, the lock has expired and another machine has acquired it, while the once-failed machine becomes available again. When it finishes processing and deletes the Key, it removes a lock that does not belong to it. Therefore, the deletion requires judgment. Only when the value is equal to the previously written one can the lock be removed. Currently, Redis does not have such a command, so Lua is usually used.

When the value is equal to the value in the engine, the Key is deleted by using the CAD command "Compare And Delete." For open-source CAS/CAD commands and TairString in module form, please see this GitHub page. Users can load modules directly to use these APIs on all Redis versions that support the Module mechanism.

When locking, we set an expiration time, such as "5 seconds." If a thread does not complete processing within that time (for example, the transaction is still not completed after 3 seconds), a mechanism is required to renew the lock before it expires. Similar to deletion, we cannot renew directly. Only when the value is equal to the value in the engine, meaning the lock is held by the current thread, can we renew it. This is a CAS operation. Similarly, if there is no API, a Lua script is needed to renew the lock.

Distributed locks are not perfect. As mentioned above, if the machine holding the lock is lost, the lock ends up held by others. When the lost machine suddenly becomes available again, its code does not check whether the lock is still held by the current thread, possibly leading to re-entry. Therefore, Redis distributed locks, as well as other distributed locks, are not 100% reliable.
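The locking, deletion, and renewal rules above can be walked through with an in-memory stand-in. This is a sketch under stated assumptions: `FakeRedis` is a hypothetical class with a manual clock, and its methods only mimic the semantics of SET with NX and EX, CAD, and a CAS-style renewal. It is not a real client or the Tair module API.

```python
import uuid

class FakeRedis:
    """In-memory stand-in with a manual clock (illustration only)."""

    def __init__(self):
        self.now = 0.0
        self.data = {}  # key -> (value, expire_at)

    def _live(self, key):
        entry = self.data.get(key)
        if entry and entry[1] <= self.now:  # lazily drop expired keys
            del self.data[key]
            return None
        return entry

    def set_nx_ex(self, key, value, ttl):
        """Mimics: SET key value NX EX ttl."""
        if self._live(key):
            return False
        self.data[key] = (value, self.now + ttl)
        return True

    def cad(self, key, value):
        """Compare And Delete: delete only if the stored value matches."""
        entry = self._live(key)
        if entry and entry[0] == value:
            del self.data[key]
            return True
        return False

    def cas_expire(self, key, value, ttl):
        """Renew the TTL only if the stored value matches."""
        entry = self._live(key)
        if entry and entry[0] == value:
            self.data[key] = (value, self.now + ttl)
            return True
        return False

store = FakeRedis()
token_a, token_b = str(uuid.uuid4()), str(uuid.uuid4())
a_locked = store.set_nx_ex("lock", token_a, 5)       # machine A acquires the lock
b_blocked = not store.set_nx_ex("lock", token_b, 5)  # B is rejected while A holds it
store.now += 6                                       # A stalls and the lock expires
b_locked = store.set_nx_ex("lock", token_b, 5)       # B acquires the lock
a_unlock = store.cad("lock", token_a)                # A wakes up; its delete is refused
b_renew = store.cas_expire("lock", token_b, 5)       # B renews its own lock
b_unlock = store.cad("lock", token_b)                # B releases its own lock
print(a_locked, b_blocked, b_locked, a_unlock, b_renew, b_unlock)
```

A plain delete in place of `cad` would have let machine A remove the lock that machine B holds, which is exactly the failure the comparison prevents.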

Summary

- CAS/CAD is an extension of Redis String.

- The implementation of distributed locks

- Renewal through CAS

- For more details, please visit the Document Center

- For open-source CAS/CAD and TairString in module form, please see this GitHub page.

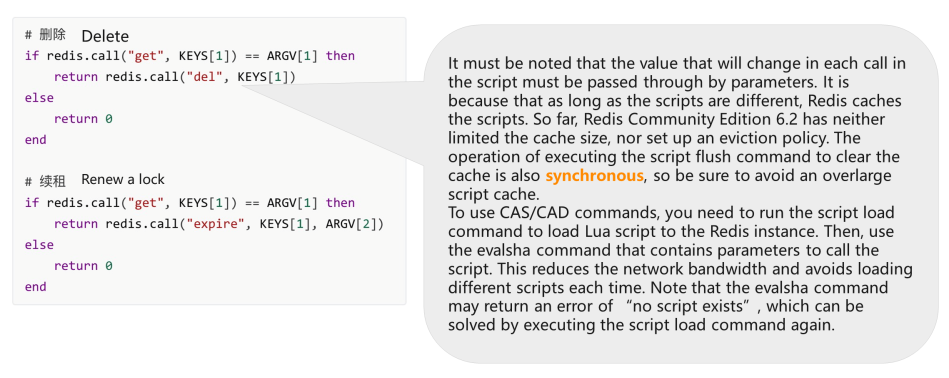

2) Lua Implementation of CAS/CAD

If there is no CAS/CAD command available, a Lua script is needed to read the Key and renew the lock if the two values are the same.

Note: Values that change on each call must be passed to the script as parameters, because Redis caches every distinct script. So far, Redis Community Edition 6.2 has neither limited the cache size nor set an eviction policy. Executing the script flush command to clear the cache is also a synchronous operation, so be sure to avoid an overlarge script cache. The feature of asynchronous cache deletion has been added to the Redis Community Edition by Alibaba Cloud engineers. The script flush async command is supported in Redis 6.2 and later versions.

To use the Lua implementation of CAS/CAD, you need to run the script load command to load the Lua script into the Redis instance. Then, use the evalsha command with parameters to call the script. This reduces the network bandwidth and avoids loading different scripts each time. Note: The evalsha command may return an error of "no script exists," which can be solved by executing the script load command again.
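The load-then-evalsha flow, including the reload on a "no script" error, might look like the sketch below. The two Lua scripts are a plausible reconstruction of compare-and-delete and compare-and-renew, not the article's exact scripts. `run_cached` is a hypothetical helper that expects a client object exposing `evalsha(sha, numkeys, *keys_and_args)` and `script_load(script)`, which is the shape redis-py uses. The caller passes in the exception type that signals a missing script (with redis-py that would be `redis.exceptions.NoScriptError`).

```python
import hashlib

# Changing values (lock token, TTL) are passed through ARGV, so the script text
# stays constant and only one entry lands in the script cache.
CAD_SCRIPT = """
if redis.call('get', KEYS[1]) == ARGV[1] then
    return redis.call('del', KEYS[1])
end
return 0
"""

CAS_RENEW_SCRIPT = """
if redis.call('get', KEYS[1]) == ARGV[1] then
    return redis.call('expire', KEYS[1], ARGV[2])
end
return 0
"""

def script_sha1(script):
    """SHA1 digest of the script body, which is how EVALSHA identifies it."""
    return hashlib.sha1(script.encode("utf-8")).hexdigest()

def run_cached(client, script, keys, args, noscript_exc):
    """Call EVALSHA; if the script is not cached, load it once and retry."""
    sha = script_sha1(script)
    try:
        return client.evalsha(sha, len(keys), *keys, *args)
    except noscript_exc:
        client.script_load(script)  # cache lost, for example after a restart
        return client.evalsha(sha, len(keys), *keys, *args)
```

A call would then look like `run_cached(client, CAD_SCRIPT, ["lock"], [token], NoScriptError)`, where `client`, `token`, and the exception class come from the application.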

More information about the Lua implementation of CAS/CAD is listed below:

- Distributed locks are not fully reliable, given the data consistency and downtime recovery capabilities of Redis.

- The Redlock algorithm proposed by the author of Redis is still controversial. For information about the Redlock algorithm, please see references 1, 2, and 3

- Other solutions (like ZooKeeper) can be considered if higher reliability is necessary (higher reliability at the cost of lower performance.)

- Use message queues to serialize a mutually exclusive operation based on the business system.

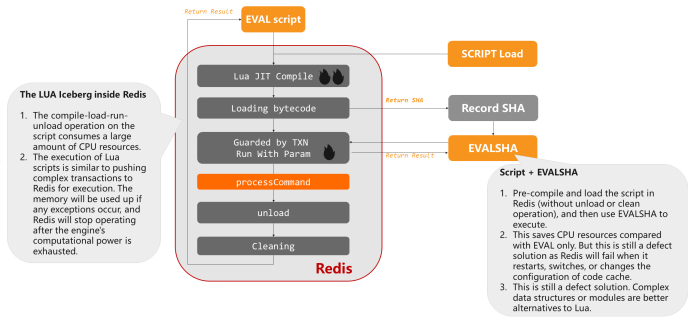

3) Lua Usage in Redis

Before executing Lua scripts, Redis needs to parse and interpret them first. Generally, Lua usage is not recommended in Redis for two reasons.

First, to use Lua in Redis, you need to call Lua from C and then call C from Lua. The returned value is converted twice, from a value compatible with the Redis protocol to a Lua object and then to C data.

Second, the execution process involves a lot of Lua script parsing and VM processing (including the memory usage of the Lua VM), so it takes longer than common commands. Thus, only simple Lua scripts, such as a single if statement, are recommended. Loops, duplicate operations, and massive data access and acquisition should be avoided as much as possible. Remember that the engine only has one thread. If the majority of CPU resources are consumed by Lua script execution, there will be few CPU resources available for business command processing.

Summary

- "The LUA Iceberg inside Redis"

The compile-load-run-unload operation on the script consumes a large amount of CPU resources. The execution of Lua scripts is similar to pushing complex transactions to Redis for execution. The memory will be depleted if any exceptions occur, and Redis will stop operating after the engine's computational power is exhausted.

- "Script + EVALSHA"

If we pre-compile and load the script in Redis (without an unload or clean operation) and use EVALSHA to execute it, this saves CPU resources compared with using EVAL only. However, this is still a flawed solution, because the code cache is lost when Redis restarts, switches over, or changes its configuration, and it then needs to be reloaded. Complex data structures or modules are better alternatives to Lua.

- When applying the JIT technology to the storage engine, remember that EVAL is evil. Try to avoid using Lua since it consumes memory and computing resources.

- Many advanced implementations of some SDKs (such as Redisson) use Lua internally. Therefore, caution must be taken, as developers can run into a CPU storm without intending to.

3. Redis Snap-Up System Instances

1) Characteristics of the Snap-Up/Flash Sale Scenario

- A flash sale means selling specified quantities of commodities at a special offer for a limited time. This attracts a large number of buyers, but unfortunately, only a few of them manage to place orders during the promotion. Thus, a flash sale generates dozens or even hundreds of times the usual visit and order request traffic within a short time.

A flash sale scenario is divided into iii phases:

- Before the Promotion: Buyers refresh the commodity details page continuously. The traffic of requests for this page spikes.

- During the Promotion: Buyers place orders. The number of order requests reaches a peak.

- After the Promotion: Buyers that have placed orders continue to query the status of orders or cancel orders. Most buyers keep refreshing the commodity details page, waiting for opportunities to place orders once other buyers cancel their orders.

2) General Methods for Dealing with the Snap-Up/Flash Sale Scenario

Snap-up/flash sale scenarios fundamentally concern highly concurrent reading and writing of hot spot data.

Snap-up/flash sale is a process of continuously pruning requests:

- Step 1: Minimize user reading/writing requests to the application server through client interception

- Step 2: Reduce the number of access requests that applications send to the backend storage system through LocalCache interception on the server

- Step 3: For requests sent to the storage system, use Redis to intercept the vast majority of requests to minimize access to the database

- Step 4: Send the final requests to the database. The application server can also use a message queue as a backup plan in case the backend storage system does not respond.

Basic Principles

1. Less Data (Staticizing, CDN, frontend resource merge, dynamic and static data separation on page, and LocalCache)

Reduce the page demand for dynamic parts in every way possible. If the frontend page is mostly static, use CDN or other mechanisms to keep requests from reaching the server. Thus, requests on the server will be reduced largely in quantity and bytes.

2. Short Path (Shorten the frontend-to-end path as much as possible, minimize the dependency on different systems, and support throttling and degradation.)

In the path from the user side to the final end, you should depend on as few business systems as possible. Bypass systems should reduce their contention, and each layer must support throttling and degradation. After throttling and degradation, the frontend prompts also need to be optimized.

3. Single Point Prohibition (Achieve stateless application services scaling horizontally and avoid hot spots for storage services.)

Stateless scale-out must be supported everywhere in the service. Stateful storage services must avoid hot spots, generally reading and writing hot spots.

Timing of Inventory Deduction

- Deduction during Order Placing: Guard against malicious unpaid orders and negative inventory when highly concurrent requests are sent

- Deduction during Payment: Guard against the negative experience of payment failure after placing orders

- Pre-Deducting and Releasing When Timeout: This can be integrated into frameworks (like Quartz) with attention paid to security and anti-fraud.

The third method is commonly selected, as the first two both have defects. For the first one, it is difficult to avoid malicious orders that are never paid. For the second one, the payment may fail because of insufficient inventory. So, the experience of the first two methods is very poor. Usually, the inventory is deducted in advance and released when the order times out. The TV framework will be used, and a security and anti-fraud mechanism will also be established.
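Pre-deducting and releasing on timeout can be sketched as follows. `Inventory` is a hypothetical in-memory class, and the 900-second hold time used in the example is an arbitrary value. A real system would keep this state in shared storage and run the release from a scheduler.

```python
class Inventory:
    """Pre-deduct stock when an order is placed; give it back if payment times out."""

    def __init__(self, stock, hold_seconds):
        self.stock = stock
        self.hold_seconds = hold_seconds
        self.pending = {}  # order_id -> payment deadline

    def reserve(self, order_id, now):
        if self.stock <= 0:
            return False               # sold out: never go negative
        self.stock -= 1                # deduct in advance
        self.pending[order_id] = now + self.hold_seconds
        return True

    def pay(self, order_id, now):
        deadline = self.pending.get(order_id)
        if deadline is None or now > deadline:
            return False               # unknown order or payment too late
        del self.pending[order_id]     # the deduction becomes final
        return True

    def release_expired(self, now):
        """Meant to be called periodically, for example from a scheduled job."""
        expired = [o for o, deadline in self.pending.items() if deadline < now]
        for order_id in expired:
            del self.pending[order_id]
            self.stock += 1            # return the unit to the sellable stock
        return expired

inv = Inventory(stock=2, hold_seconds=900)
first = inv.reserve("a", now=0)
second = inv.reserve("b", now=0)
third = inv.reserve("c", now=0)        # rejected: both units are held
paid = inv.pay("a", now=100)
released = inv.release_expired(now=1000)
print(first, second, third, paid, released, inv.stock)
```

The unpaid order "b" is released after its deadline, so the unit becomes available to other buyers again.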

Common Implementation of Redis

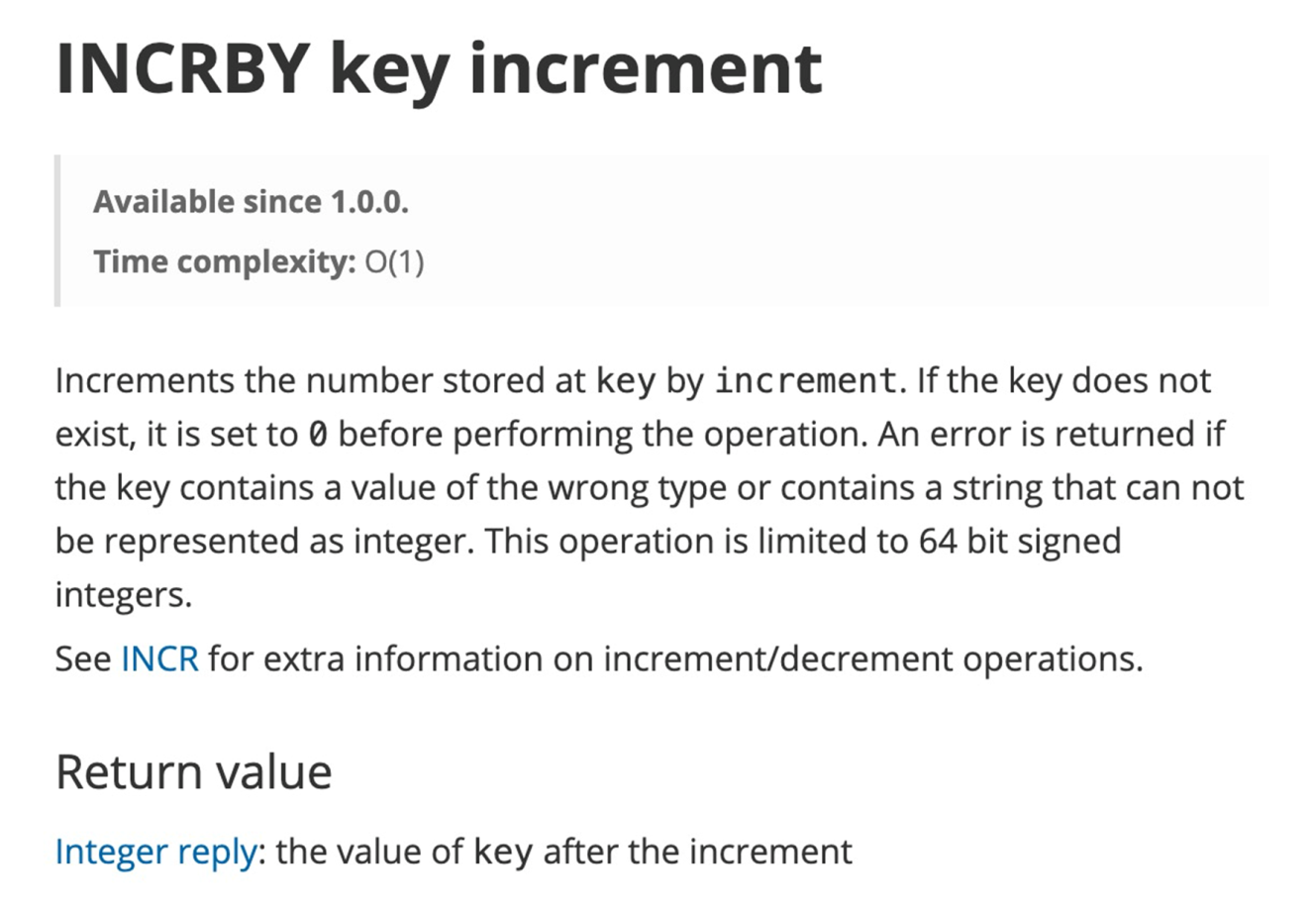

1. String Structure

- Use incr/decr/incrby/decrby directly. Note: Currently, Redis does not support upper and lower bound limits.

- Lua is available to avoid negative inventory or to deduct the associated inventories of an SKU.

2. List Structure

- Each commodity is a List, and each Node is an inventory unit.

- Use the lpop or rpop command to deduct inventory until nil (key does not exist) is returned.

The List structure has some disadvantages. For example, more memory is occupied. If multiple inventory units are deducted at a time, lpop needs to be called multiple times, which affects the performance.
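The List approach and its per-unit cost can be illustrated with a deque standing in for one Redis List. `ListInventory` is a hypothetical class. Its `lpop` only mirrors the behavior described above, returning None where Redis would return nil.

```python
from collections import deque

class ListInventory:
    """One commodity as a list of inventory units."""

    def __init__(self, units):
        self.units = deque(units)
        self.calls = 0  # number of lpop calls issued

    def lpop(self):
        self.calls += 1
        return self.units.popleft() if self.units else None

    def deduct(self, count):
        """Deduct `count` units, one lpop call per unit, stopping at nil."""
        taken = []
        for _ in range(count):
            unit = self.lpop()
            if unit is None:  # nil: the inventory is gone
                break
            taken.append(unit)
        return taken

inv = ListInventory(["u1", "u2", "u3"])
first = inv.deduct(2)
second = inv.deduct(2)
print(first, second, inv.calls)
```

Deducting two units takes two calls, and the second batch stops as soon as nil comes back.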

3. Set/Hash Structure

- Applicable to deduplication. To restrict user purchase quantity, use hincrby to count and hget to judge the purchased quantity.

- Note: You need to map the user UIDs to multiple keys for reading and writing. You must not put all UIDs in one key (hot spot), since the typical read and write bottlenecks of a hot key will directly cause business bottlenecks.

4. (If Service Scenarios Allow) Multiple Keys (key_1, key_2, key_3...) for Hot Commodities

- Random selection

- User UID mapping (different inventory can also be set according to user levels.)
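Spreading one hot commodity over several keys can be sketched with two helpers. `shard_key` and `split_stock` are hypothetical names. The CRC32 hash is just one stable choice for mapping a UID to a key, not something the article prescribes.

```python
import zlib

def shard_key(base_key, uid, shards):
    """Map a user UID to one of base_key_1 .. base_key_N in a stable way."""
    index = zlib.crc32(str(uid).encode("utf-8")) % shards
    return f"{base_key}_{index + 1}"

def split_stock(total, shards):
    """Divide the total inventory as evenly as possible across the shard keys."""
    base, extra = divmod(total, shards)
    return [base + (1 if i < extra else 0) for i in range(shards)]

stock_per_key = split_stock(10, 3)
key_for_user = shard_key("key", 1001, 3)
print(stock_per_key, key_for_user)
```

One trade-off of UID mapping is that a user may see "sold out" on their key while other keys still hold stock. Random selection with a retry on another key avoids that at the cost of extra requests.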

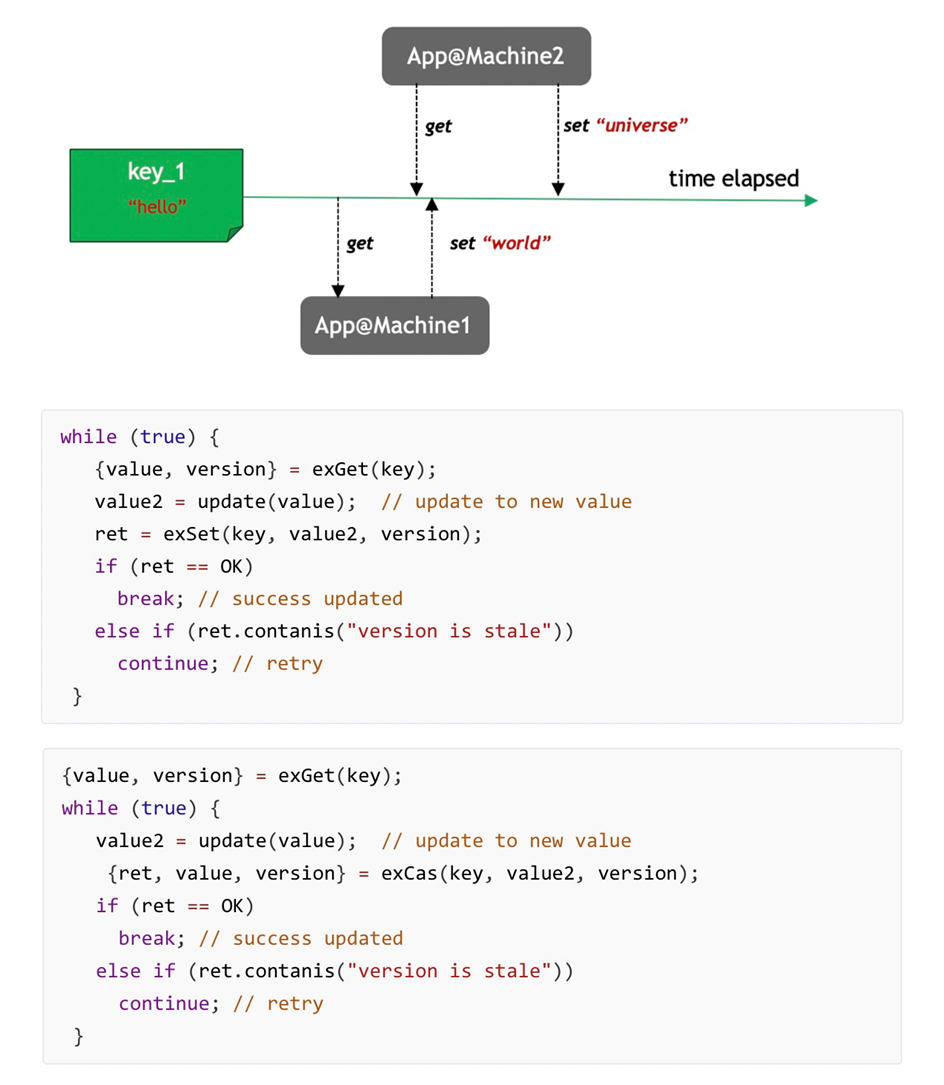

3) TairString: String That Supports High-Concurrency CAS

TairString, another structure in the module, modifies Redis String and supports high-concurrency CAS and a Version. It carries Version values, which enable the implementation of an optimistic lock during reading and writing. Note: This String structure is different and cannot be used together with other common String structures in Redis.

As shown in the figure above, the operation first calls exGet, which returns (value, version). It then operates on the value and updates it with the previous version. If the versions are the same, the update succeeds. Otherwise, re-read and modify before updating again to implement the CAS operation. This is called an optimistic lock on the server.

To optimize the scenario mentioned above, you can use the exCAS interface. Similar to exSet, exCAS returns a version mismatch error when it encounters a version conflict, but it also returns the new value and its updated version. Thus, another API call is saved. By applying exCAS after exSet and performing exSet -> exCAS again when the API call fails, network interaction is reduced. Thus, the access volume to Redis is reduced.
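The version-based optimistic lock can be sketched with an in-memory stand-in. `VersionedStore` and its method names are hypothetical and do not reproduce the TairString command signatures. The point is only that a failed CAS hands back the current value and version, so the retry needs no separate read.

```python
class VersionMismatch(Exception):
    """Raised on conflict; carries the current value and version."""

    def __init__(self, value, version):
        super().__init__("version mismatch")
        self.value, self.version = value, version

class VersionedStore:
    """In-memory stand-in for a versioned string (illustration only)."""

    def __init__(self):
        self.data = {}  # key -> (value, version)

    def ex_get(self, key):
        return self.data[key]

    def ex_set(self, key, value):
        _, version = self.data.get(key, (None, 0))
        self.data[key] = (value, version + 1)  # unconditional write bumps the version

    def ex_cas(self, key, new_value, version):
        current_value, current_version = self.data[key]
        if version != current_version:
            raise VersionMismatch(current_value, current_version)
        self.data[key] = (new_value, current_version + 1)
        return current_version + 1

def update_with_retry(store, key, transform, max_attempts=5):
    """Read once, then retry CAS using the value returned by each conflict."""
    value, version = store.ex_get(key)
    for _ in range(max_attempts):
        try:
            return store.ex_cas(key, transform(value), version)
        except VersionMismatch as conflict:
            value, version = conflict.value, conflict.version
    raise RuntimeError("too many conflicts")

store = VersionedStore()
store.ex_set("stock", 10)
new_version = update_with_retry(store, "stock", lambda v: v - 1)
print(store.ex_get("stock"), new_version)
```

Here the stock goes from 10 to 9 and the version from 1 to 2. Under contention, each conflict costs one round trip instead of a read followed by a write.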

Summary

TairString is a string that supports high-concurrency CAS.

Version-Carried String

- Ensure the atomicity of concurrent updates

- Implement updates and optimistic lock based on Version

- It cannot be used together with other common String structures in Redis.

More Semantics

- exIncr/exIncrBy: Snap-up/flash sale (with upper and lower bounds)

- exSet -> exCAS: Reduce network interactions

- For more details, please visit the Document Center

- For open-source TairString in module form, please see this GitHub page.

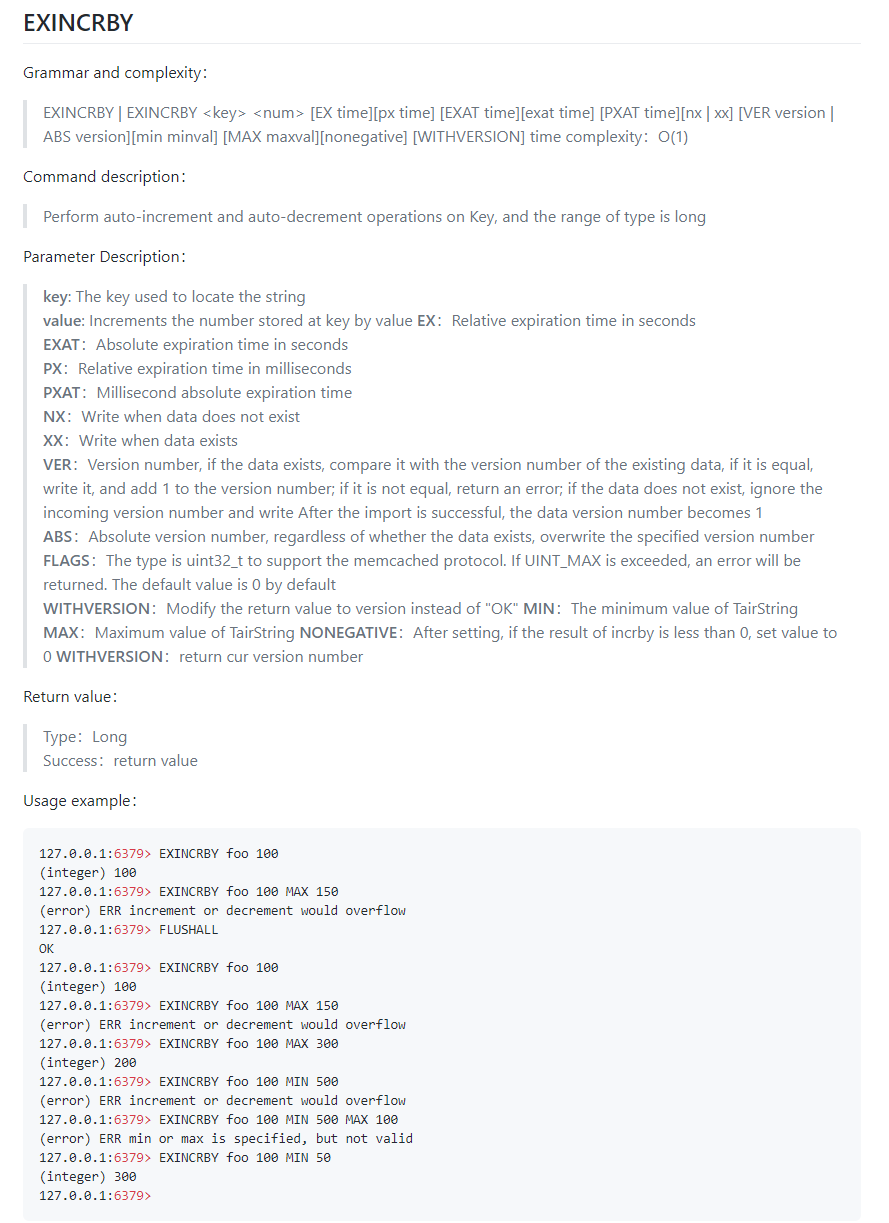

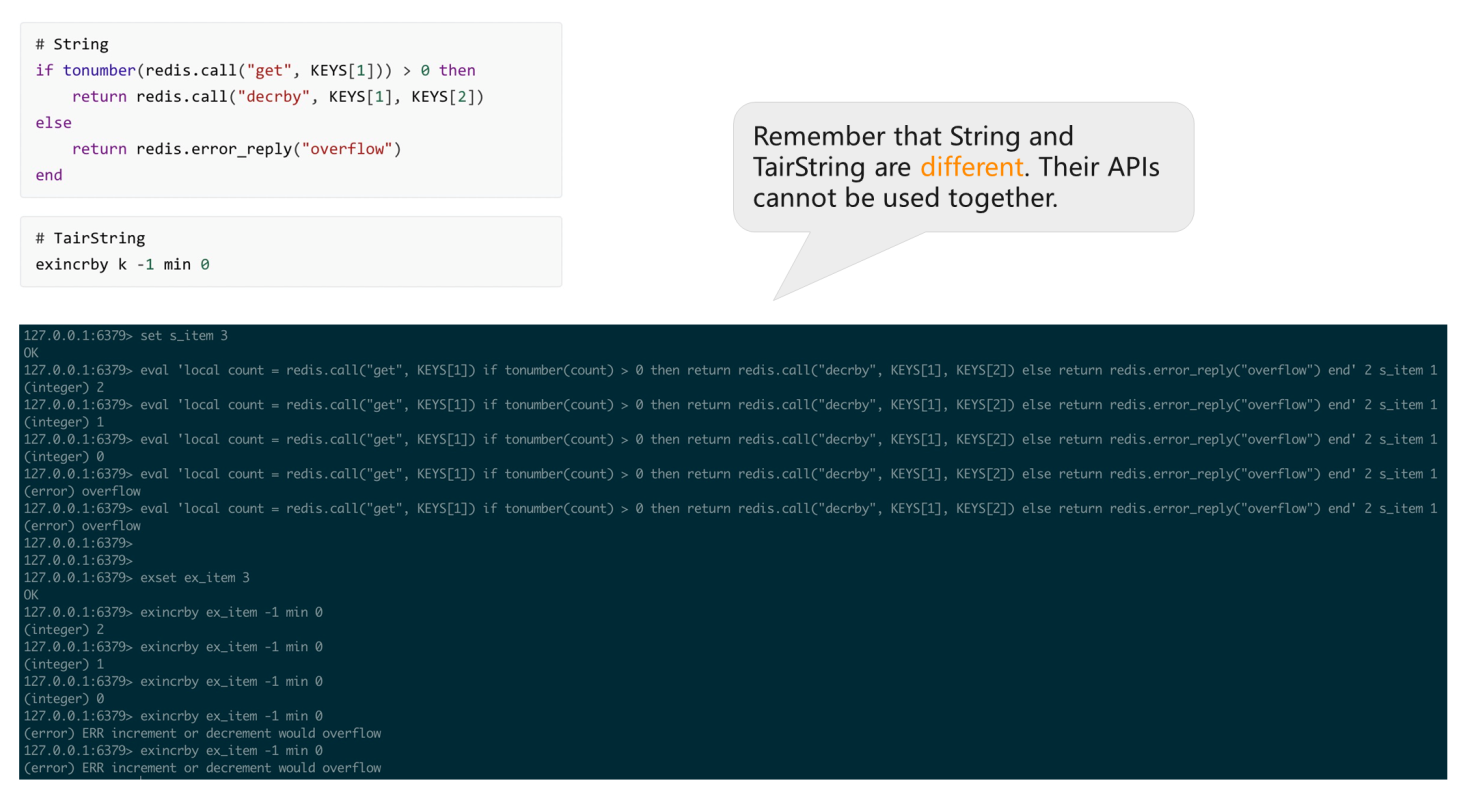

4) Comparisons between String and exString Atomic Count

String uses the INCRBY method, which has no upper or lower bound. exString uses the EXINCRBY method, which provides various parameters, including upper and lower bounds. For example, if the minimum value is set to 0, the value cannot be reduced once it equals 0. exString also supports specifying an expiration time. For example, a product can only be snapped up within a specified period and cannot be snapped up after it expires. The business system may also restrict the cache by clearing entries after a specified time. If the cached inventory is limited, the goods are removed after ten seconds if no order is placed. If new orders keep coming, the cache is renewed. To achieve this, a parameter needs to be included in EXINCRBY to renew the cache each time INCRBY or the API is called. Thus, the hit rate can be improved.

What Is the Function of Counter Expiration Time?

- A commodity can be snapped up within a certain period and cannot be snapped up afterward.

- If the cache inventory is limited, commodities with no orders will expire and be deleted. If a new order is placed, the cache will be renewed automatically for a period to increase the cache hit rate.
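The combination of bounds and a renewed expiration time can be illustrated with a small in-memory counter. `BoundedCounter` is a hypothetical class that only mimics the behavior described above. It is not the EXINCRBY command, and its parameter names are assumptions.

```python
class BoundedCounter:
    """In-memory stand-in for a counter with bounds and a sliding expiry."""

    def __init__(self, value, ttl, now=0):
        self.value = value
        self.ttl = ttl
        self.expire_at = now + ttl

    def incr_by(self, delta, now, minimum=None, maximum=None):
        if now >= self.expire_at:
            raise KeyError("counter expired")      # the sale window is over
        candidate = self.value + delta
        if minimum is not None and candidate < minimum:
            raise OverflowError("below lower bound")
        if maximum is not None and candidate > maximum:
            raise OverflowError("above upper bound")
        self.value = candidate
        self.expire_at = now + self.ttl            # renew on every successful update
        return candidate

counter = BoundedCounter(value=3, ttl=10)
after_first = counter.incr_by(-1, now=5, minimum=0)
after_second = counter.incr_by(-1, now=14, minimum=0)  # still alive thanks to the renewal
print(after_first, after_second, counter.expire_at)
```

Without the renewal at `now=5`, the second call at `now=14` would already have found the counter expired.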

As shown in the figure, a Lua script is suitable when using Redis String. If the value returned by "get" for KEYS[1] is larger than "0," use "decrby" to subtract "1." Otherwise, the "overflow" error is returned. A value that has been reduced to "0" cannot be decreased further. In the example, ex_item is set to "3." It is decreased three times, and the "overflow" error is returned once the value has become "0."
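Since the figure is not reproduced here, the following is a reconstruction of what such a script could look like, together with a pure-Python mirror of its logic that runs without a server. Both the script text and the `decr_if_positive` helper are assumptions based on the description above.

```python
# Lua script as it might be sent to Redis (reconstructed from the description).
DECR_IF_POSITIVE = """
local stock = tonumber(redis.call('get', KEYS[1]))
if stock and stock > 0 then
    return redis.call('decrby', KEYS[1], 1)
end
return redis.error_reply('overflow')
"""

def decr_if_positive(store, key):
    """Pure-Python mirror of the script above, run against a plain dict."""
    stock = int(store.get(key, 0))
    if stock > 0:
        store[key] = stock - 1
        return store[key]
    raise OverflowError("overflow")

store = {"ex_item": 3}
remaining = [decr_if_positive(store, "ex_item") for _ in range(3)]
try:
    decr_if_positive(store, "ex_item")
    fourth = "ok"
except OverflowError as exc:
    fourth = str(exc)
print(remaining, fourth)
```

Three decrements succeed and the fourth is refused, so the inventory never becomes negative.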

exString is very simple to use. Users only need to exset a value and execute "exincrby k -1." Remember that String and TairString are different. Their APIs cannot be used together.


Source: https://www.alibabacloud.com/blog/high-concurrency-practices-of-redis-snap-up-system_597858
